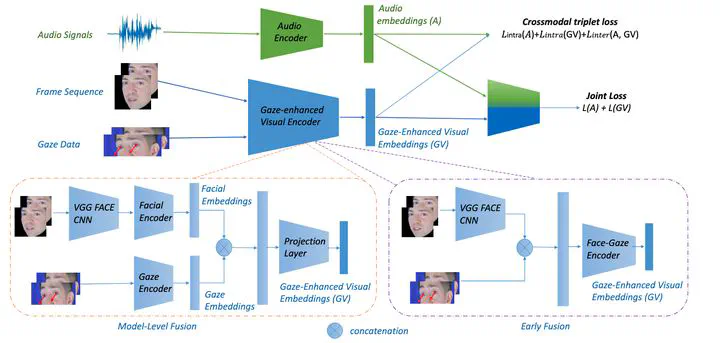

Gaze-enhanced Crossmodal Embeddings for Emotion Recognition

Image credit: Authors

Abstract

Emotional expressions are inherently multimodal – integrating facial behavior, speech, and gaze – but their automatic recognition is often limited to a single modality, e.g. speech during a phone call. While previous work proposed crossmodal emotion embeddings to improve monomodal recognition performance, it did not include an explicit representation of gaze, despite the importance of gaze for emotion recognition. We propose a new approach to emotion recognition that incorporates an explicit representation of gaze in a crossmodal emotion embedding framework. We show that our method outperforms the previous state of the art for both audio-only and video-only emotion classification on the popular One-Minute Gradual Emotion Recognition dataset. Furthermore, we report extensive ablation experiments and provide detailed insights into the performance of different state-of-the-art gaze representations and integration strategies. Our results not only underline the importance of gaze for emotion recognition but also demonstrate a practical and highly effective approach to leveraging gaze information for this task.

Publication

In ETRA - ACM Symposium on Eye Tracking Research & Applications